
Self-driving cars should have no morals

WHAT?! You don’t want self-driving cars to have morals?? Hell no I don’t. That would be terrible.

Fair shoutout on this one: I was re-listening to Hello Internet #71 - Trolley Problem on my way to work this morning, and many of my thoughts are based on things Grey was saying. Some are basically just lifted straight from his mouthpiece and into my brain goo, and others have a spice of my own addition. The gist of it is this - the more stuff you try to make a self-driving car think about, the more likely it is you’re going to introduce bugs and false positives.

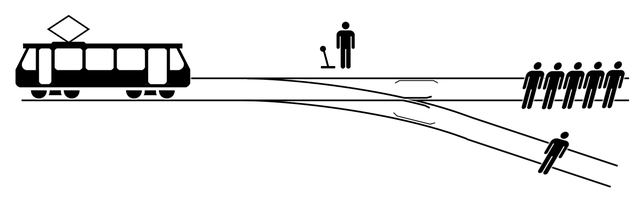

Let’s take the trolley problem as a starting example. It usually goes like this - there’s a trolley (it’s like a tram or something and not a shopping trolley, fellow Brits) that is hurtling down the rails out of control. You happen to be standing next to the lever that controls the points at a rail junction. As ye do.

From there there’s usually a combo of people knocking about tied to the tracks, tripped over on the tracks, or generally being on the tracks for some reason or other. If you do nothing then the trolley will go straight on and kill 5 people; if you adjust the points it’ll veer off to the other rails and only kill one person. What do you do?

By McGeddon - Own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=52237245

Then it usually gets complex and things change. Now on the alternate path will be a 90-year-old and on the straight non-action path will be a thief and a newborn baby. Obviously the question here is trying to put a value on people’s lives - does a newborn baby with a whole life ahead of it outrank someone who’s likely in their last decade? How about if you weigh in the value of the thief’s life? From here it’ll go into varying comparisons like a fat person vs a thin person, a man vs a woman, three thieves vs one lawyer, and so on.

Have a think about a few examples like that, no judgement on who you deemed worthy of a squishing, and then consider how this will apply to a self-driving car.

Take away the rails now, because the self-driving version of this example is usually that your car is driving fast behind a lorry. The lorry slams on its brakes and your car realises its own brakes aren’t working. The car’s options are either to take no corrective action - run right into the back end of the lorry, instantly obliterating you and itself - or swerve up onto the pavement where a few pedestrians are standing. In milliseconds your car has to decide: kill my driver and myself, or kill innocent pedestrians?

There have been loads of arguments for and against killing the car’s passengers: they bought the car and so should know this is a possibility, the people going about their day on the pavement are innocent, the car could swerve the other direction into oncoming traffic, and so on. So what should the car do? Should someone write some code that weighs up the morality ranking of each of the pedestrians and, if they score lower than the car’s passengers, swerve on into the peds? Maybe it should protect its owner at all costs? Maybe it should be protecting non-drivers at all costs, as they have signed no contract involving cars potentially mowing them down? Saving the car’s owner would be in the interest of the companies selling the cars anyway. Who’s going to buy a car that will always pick the option that kills them?

Nah. The car should not have started in the first place. This is a dang self-driving smarter-than-people computer bashed into the shape of a car we’re talking about here. Why on earth wouldn’t it be doing 20 thousand or so system checks per second? As soon as you opened the door to get in it should have piped up about the upcoming brake problem!

Oh damn chief. My brakes look like they’re a bit worn out, I can’t take you anywhere today. A service has been scheduled and a courtesy car will be arriving in 5 minutes. Sorry for the inconvenience! Pip pip!

Heck, you’d have probably already got the notification on your car app the night before. The courtesy car might already be in your drive in the morning.
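To make the pre-trip idea a bit more concrete, here’s a minimal sketch in Python. Everything in it is made up for illustration: the check names, the `pre_trip_check` function and the messages are my own assumptions, not anything a real car actually runs.

```python
def pre_trip_check(checks):
    """Return (ok_to_drive, message) from a dict of check name -> passed.

    Hypothetical helper: the check names and wording are illustrative only.
    """
    # Collect the names of every check that did not pass.
    failed = sorted(name for name, passed in checks.items() if not passed)
    if failed:
        # Any failure at all means the car refuses to set off.
        return False, "Can't take you anywhere today. Failed checks: " + ", ".join(failed)
    return True, "All checks passed. Where to?"


ok, message = pre_trip_check({"brakes": False, "tyres": True, "lights": True})
print(ok, message)
```

The point of the sketch is that there’s no weighing up of anything: one failed check and the car stays on the drive.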

Even if there was a rare event that caused the brakes to fail during the trip, what are the chances they failed literally seconds before a fatal collision? Pretty low really. Should the car’s programmer put in a bit of code to calculate the value of people’s lives and maybe swerve into people it deems worth less? No way! Now you’ve got a bit of code that’s constantly paranoid about brake failure and suddenly stopping lorries. You’re introducing potential bugs - maybe if there’s an eclipse and at the same time you go under a bridge, this paranoid little function buried in the car’s brain interprets it as a lorry stopping dead in front of you. Literally nothing there, but it decides to jump the kerb and take out a pedestrian. You just don’t want to add that complexity!

How the car should react is basic collision avoidance - keep clear of the lorry in front, match its speed at most once you’re close, allow ample braking distance. If the brakes fail - coast to a stop immediately. IMMEDIATELY. Not when there’s a lorry in front of you; the moment there’s a fault detected in the brakes, come to a safe stop. Drive no further. All stop.
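Here’s a rough sketch of what that “no morals” rule set could look like in Python. It’s a toy under loud assumptions: the `Readings` fields, the `decide` function, the half-second reaction time, the 6 m/s² braking figure and the “twice the stopping distance counts as close” rule are all numbers and names I’ve invented for illustration, not real automotive values.

```python
from dataclasses import dataclass

REACTION_S = 0.5   # assumed delay before the brakes bite, in seconds
DECEL_MPS2 = 6.0   # assumed braking deceleration, in m/s^2


@dataclass
class Readings:
    speed_mps: float       # our speed
    lead_speed_mps: float  # speed of the lorry in front
    gap_m: float           # distance to the lorry in front
    brake_fault: bool      # has any brake fault been detected?


def stopping_distance(speed_mps):
    """Reaction distance plus braking distance, v*t + v^2 / (2a)."""
    return speed_mps * REACTION_S + speed_mps ** 2 / (2 * DECEL_MPS2)


def decide(r):
    """Pick one action. Note there is no swerve option anywhere."""
    if r.brake_fault:
        # Checked first, whether or not there is a lorry in front.
        return "stop_safely"
    needed = stopping_distance(r.speed_mps)
    if r.gap_m < needed:
        # Not enough braking distance: drop back.
        return "ease_off"
    if r.gap_m < 2 * needed and r.speed_mps > r.lead_speed_mps:
        # Close-ish and gaining: match the lorry's speed at most.
        return "ease_off"
    return "carry_on"


print(decide(Readings(speed_mps=20, lead_speed_mps=20, gap_m=100, brake_fault=True)))
```

The brake fault check sits at the top on purpose, so it fires the moment a fault shows up rather than waiting for something to be in the way.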

Another thing that the mysterious arguments I may have invented to argue against often fail to take into account is that these cars are computers on wheels - they probably have an ad-hoc wifi connection of some kind keeping each other up to date on the day’s juicy car gossip. Our car could tell every other car in the surrounding mile that its brakes are a bit wonky and that it needs to find a safe space to stop. The rest of the cars could avoid it like the plague! There would be no lorry in front because it’d have let us pass at a junction somewhere earlier without us even knowing it exists.
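As a very hand-wavy sketch of the “tell every car within a mile” bit, here’s some Python that works out who should get the warning. It assumes the cars already share positions as flat x/y coordinates in metres, and `cars_to_warn` and the car IDs are invented for this example; how the cars would actually talk to each other is left out entirely.

```python
import math

MILE_M = 1609.344  # one mile in metres


def cars_to_warn(faulty_pos, other_cars, radius_m=MILE_M):
    """Return the IDs of cars within radius_m of the faulty car.

    other_cars maps a (hypothetical) car ID to an (x, y) position in metres.
    """
    fx, fy = faulty_pos
    return [
        car_id
        for car_id, (x, y) in other_cars.items()
        if math.hypot(x - fx, y - fy) <= radius_m
    ]


nearby = cars_to_warn((0, 0), {"lorry": (100, 0), "taxi": (5000, 0), "van": (0, 1600)})
print(nearby)
```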

Self-driving cars should, to simplify it in a way that diminishes the work these programmers are doing writing the self-drive code, get us from A to B without colliding with anything and without allowing anything to collide with us. None of this swerve protocol, no jumping on rails and turning into a shopping trolley. Don’t drive into things, stay between the lines. That’ll solve all of the problems without us having to cram Data from Star Trek into every car’s engine. No morals, no emotion, no designating people as killable. Just straight up don’t hit things, and give us a ping on the phone if the brakes are broke.

Sorted.